Why YouTube was wrong to remove PewDiePie’s “Coco” diss track

YouTube: PewDiePie

After years of allowing diss tracks on the platform, YouTube has deleted PewDiePie’s new parody song, Coco, which was aimed at children’s channel Cocomelon. In my opinion, Google’s move exposes its own policies as broken.

Diss tracks have been a staple of the YouTube community since they exploded in popularity in 2017. Since then, the musical trend has become a parody of itself, with every influencer and their mom dropping an overproduced song to start “beef” with someone.

The site’s largest individual creator, PewDiePie, reignited the format with his hilarious 2018 hit B**ch Lasagna, which comedically took aim at subscriber rival T-Series. However, the Swede’s latest release, Coco, has “crossed the line,” according to Google, and the company couldn’t be more wrong.


Google deletes PewDiePie’s viral ‘Coco’ diss track

On February 18, Coco was mysteriously removed from YouTube after racking up over 10 million views. The satirical diss track had debuted just a week earlier and was aimed, entirely in jest, at children’s channel Cocomelon.

Yes, you read that right. Over the last year, Felix “PewDiePie” Kjellberg and his fans have joked that the kids’ channel was a serious rival, since its subscriber count was quickly set to surpass his. The “beef” was a complete joke, however, and the 31-year-old has even praised Cocomelon for its success on numerous occasions.

Coco was 100% a satirical take on diss tracks, and it even used a nursery rhyme format for comedic effect. Dressed up like Mr Rogers, Pewds danced around a classroom while spitting childhood-ruining lines like “Santa isn’t real” and “I’d spoil Harry Potter for you, but J.K. Rowling already did that.”

Point being, the whole song was a joke, and almost the entire community took it that way, except Google. On February 18, the company deleted the video, claiming it amounted to “child endangerment” and “harassment” of another creator (in the song, he also takes jabs at 6ix9ine, using the millionaire rapper as a joke punching bag).

YouTube doesn’t know its own policies

The decision is nonsense. The video was delayed for months because the YouTuber took extra steps to comply with child safety regulations for filming. The talented kids in the video were actors, and their parents were on set and gave their consent. And here’s some movie magic: the kids never actually heard any swearing or foul language, because a censored version of the track was played on set while Kjellberg lip-synced.

Google, however, argues that the song still violates its policy because it is designed to look like children’s content while containing inappropriate material. But herein lies the problem: in 2018, after YouTube faced several scandals over disturbing videos passing themselves off as made for kids, the platform rolled out new safety features, on top of already running a separate children’s app, YouTube Kids.

Coco was not marked for kids. In fact, it was marked specifically for adults and labeled as “not safe” for minors. Kids shouldn’t have been able to view the upload in the first place. So is YouTube admitting that its safety features don’t actually work? And what does it even mean to “look like children’s content”?

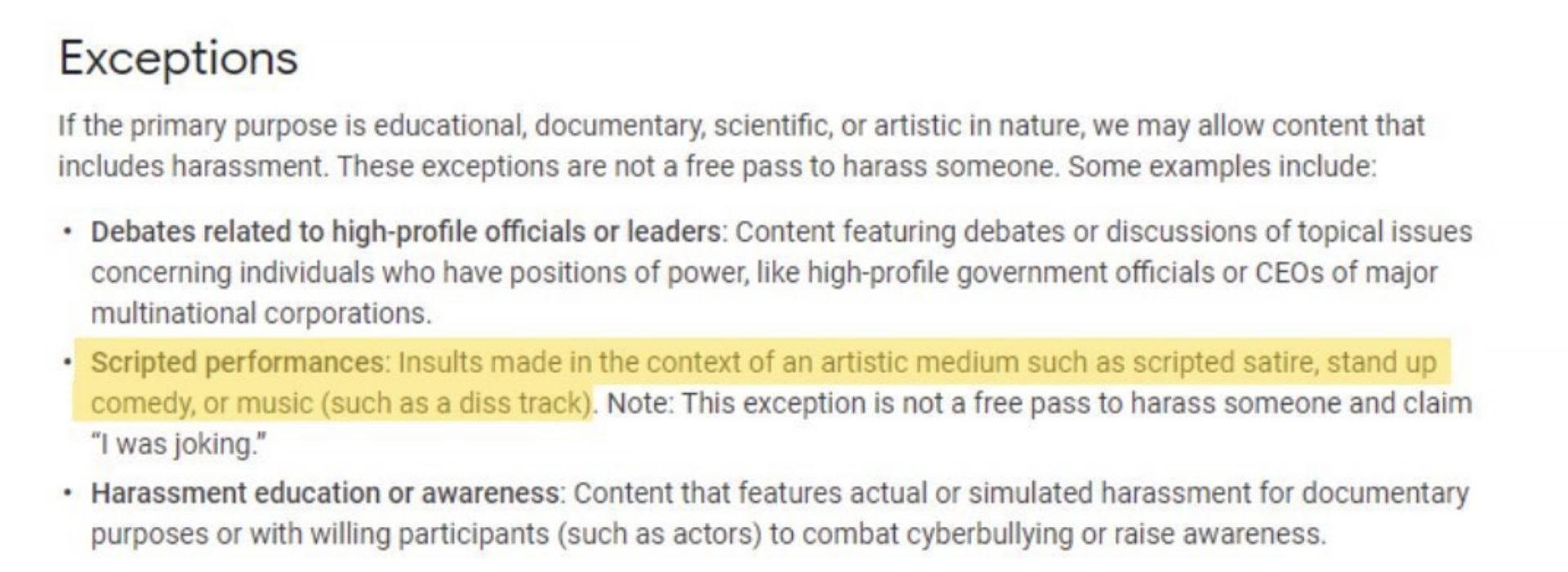

(1/2) This video violated two policies: 1) Child safety: by looking like it was made for kids but containing inappropriate content. 2) Harassment: by inciting harassment @ other creators– we allow criticism but this crossed the line. Specific policy details in the image below. pic.twitter.com/LmqRU2EZWH

— TeamYouTube (@TeamYouTube) February 19, 2021

In 2019, hit rock band Weezer released their single “High as a Kite.” Like Kjellberg, lead singer Rivers Cuomo dressed up like Mr Rogers in the video, which starts out as a kids’ show and turns into a drug-induced nightmare of children screaming and crying (the kids in Coco are having a great time in comparison). By Google’s logic, shouldn’t Weezer’s video be pulled too?

The last claim YouTube makes is that the video violates its harassment policy. Assuming it is referring to 6ix9ine, the most PewDiePie does is joke that he wants a legal fight with the rapper. What about creators like Jake Paul or KSI, whose diss tracks led to actual pay-per-view boxing matches? But that’s fine, right?

If PewDiePie’s video is in violation of the site’s rules then, in my opinion, Google is admitting its platform’s safety measures for children do not work and that its own policies are a failure.

Speaking of policy, YouTube’s own guidelines state that satirical trash talk aimed at other creators, such as diss tracks, is allowed and is not considered harassment. So how does this make any sense?

Not only has the viral song been swiftly banned from every corner of the platform, but the company has also warned other channels not to upload even clips of it or else face punishment.

YouTube

The decision was inevitably met with backlash from users and fellow content creators, who couldn’t help but point out that YouTube doesn’t even seem to know its own rules. It’s also just bad optics. PewDiePie is the platform’s biggest content creator, whether it likes it or not.

After years of tension with the Swede, Google seemed to have finally embraced his popularity when it signed the 31-year-old to a major streaming exclusivity deal. Less than a year later, it is deleting his viral video and can’t even adequately explain why. Everyone except YouTube seems to be in on the joke, so it’s time for the company to decide: does it like him or not?