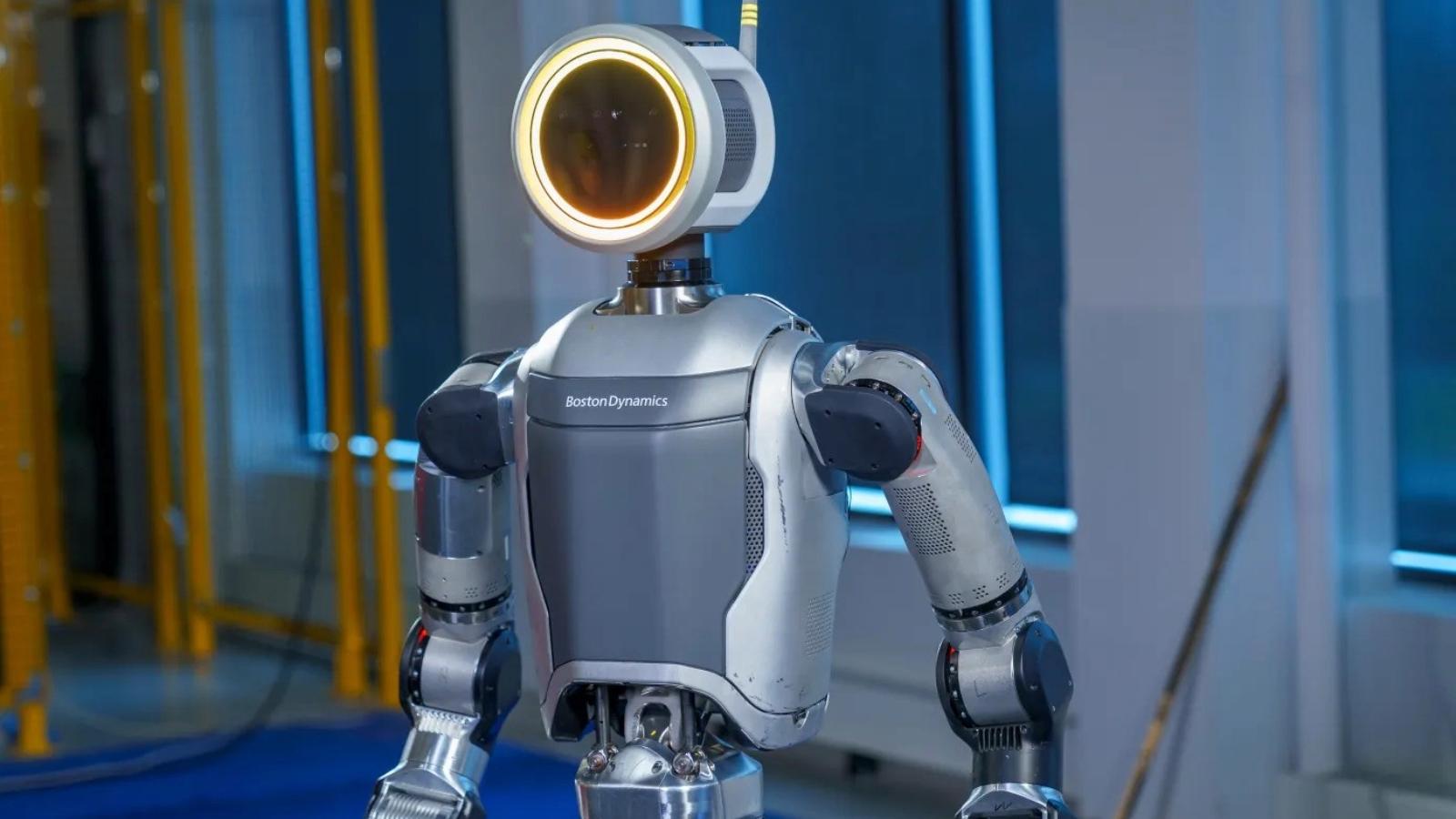

Hundreds of ChatGPT jailbreaks for sale in “shadow forums” amid AI crime boom

ChatGPT has massively disrupted the modern tech landscape, but new AI technologies bring new vulnerabilities, as a new report from Kaspersky shows.

The ChatGPT and AI craze shows no signs of stopping, with CES 2024 showcasing dozens of new AI-focused devices. However, ChatGPT, one of the biggest AI applications on the planet, has been found to have several vulnerabilities that let users bypass its built-in safety guardrails, according to a report from security firm Kaspersky (via The Register).

Between January and December 2023, Kaspersky observed a boom in discussions about using GPT to create malware that runs on the ChatGPT domain. While Kaspersky notes that no malware of this kind has emerged yet, it could become a threat in the future.

The report also shows how ChatGPT can be used to aid crime, for example by generating code to solve problems that arise when stealing user data. According to Kaspersky, ChatGPT can lower the barrier to entry for people looking to use AI’s knowledge for malicious purposes. “Actions that previously required a team of people with some experience can now be performed at a basic level even by rookies,” the report states.

Jailbreak prompts bought and sold


ChatGPT jailbreaks are nothing new; the “Grandma” exploit, for example, gained notoriety last year. Many of these exploits have since been patched by OpenAI, but others still exist, and they’re on sale if you know where to look. Kaspersky’s report states that during 2023, “249 offers to distribute and sell such prompt sets were found.”

According to the report, these jailbreak prompts are common and circulate among users of “social platforms and members of shadow forums”. However, the security firm notes that not all prompts are engineered to perform illegal actions; some are simply used to get more precise results.

The report also states that getting ChatGPT to hand over information it would usually restrict can be as simple as asking again.

Kaspersky adds that ChatGPT can be used in penetration-testing scenarios, and that the underlying LLM is also being repurposed in “evil” modules designed to cause harm and focused on illicit activities.

AI crime boom

Claims of “dupe” or “evil” versions of ChatGPT are on the rise, and many of them are designed only to phish and steal user data. On top of this, stolen ChatGPT Plus accounts are being sold illicitly on the dark web. The number of AI-related posts on malicious forums peaked in April 2023, but the topic remains of ongoing interest to their users. Kaspersky ends its report with a stark warning:

“it is likely that the capabilities of language models will soon reach a level at which sophisticated attacks will become possible.”

Until then, just remember to keep your personal details as safe and secure as possible.