Riot announce further plans to combat toxicity in Valorant comms

Riot Games

Toxicity in online gaming is a persistent problem, and Riot Games have announced they’re working on further tools to help combat it in their first-person shooter, Valorant.

Valorant is unique among Riot’s titles. It’s the only game that incorporates an in-game voice chat system a la CS:GO and Overwatch. The voice chat caters to the need for fast-paced communication in any first-person shooter, but it’s also been a source of harassment and toxicity for Valorant’s player base.

On February 10, Riot Games released a blog post detailing the actions they would be taking to further prevent online toxicity and verbal abuse in Valorant’s comms. So far, they’ve introduced a muted words list that allows players to filter out words and phrases they don’t want to see in the game’s text chat.

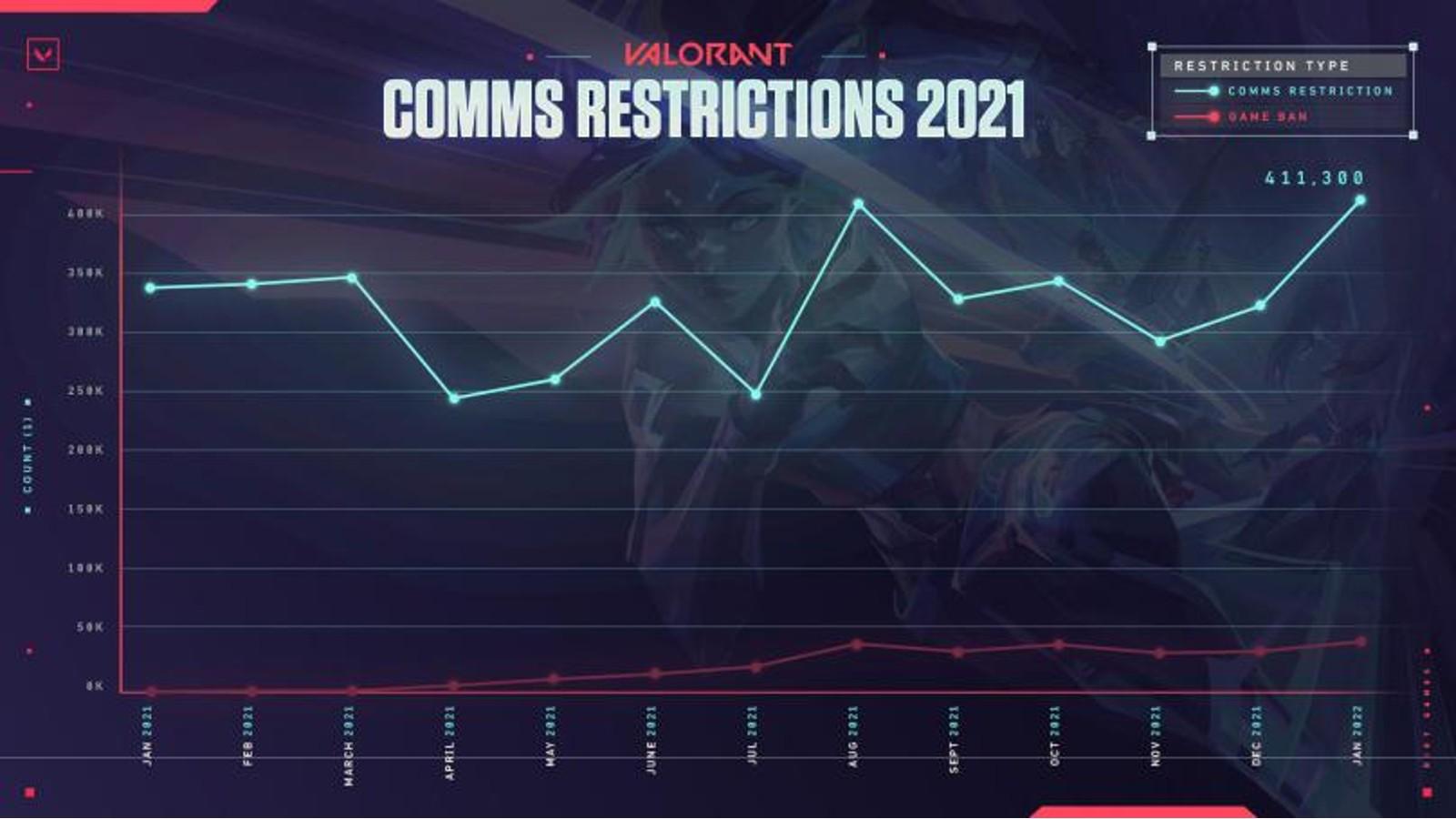

400,000+ chat bans in January 2022

The blog post also details the rates of chat bans and account bans administered by Riot for repeated toxic behavior. Riot reports that they have administered over 400,000 voice and text chat bans in January 2022 alone, and have banned over 40,000 accounts in the same timespan for “numerous, repeated instances of toxic communication.”

These bans can be as short as a few days for first-time offenders, or last up to a year for “chronic” offenders.

However, Riot acknowledged that there is still work to be done in this regard, explaining that from player surveys, they understand that “the frequency with which players encounter harassment in our game hasn’t meaningfully gone down.”

Future plans

The post also details Riot’s future plans to continue combating toxicity in Valorant. Notably, they announced that they will be trialing a new “voice evaluation system” in North America. The system involves recording all in-game voice communications, with Riot clarifying that they would “update with concrete plans about how it’ll work well before we start collecting voice data in any form.”

Players can apparently expect an update on voice chat moderation systems “around the middle of this year”, with Riot also explaining that they would be increasing punishment severity for infringements made under the game’s current detection systems.

The post also details plans for more “real-time text moderation”. Currently, instances of “zero-tolerance” language in text chat aren’t acted on until the game has finished, but Riot have said they’re looking into ways to “administer punishment immediately after they happen.”