ChatGPT-4 is more likely to provide false information, finds new study

OpenAI’s recent release of ChatGPT-4 has been scrutinized in a new study, which finds it more likely to spread false information than its predecessor.

AI development is an ongoing process, and the recently released chatbots are the public’s first real chance to use the technology. OpenAI’s ChatGPT has recently received a major upgrade to GPT-4, an update meant to bring better answers and more accurate information.

However, a new study by NewsGuard has found that the latest version is more easily persuaded to spread misinformation. This contrasts with OpenAI’s claim that GPT-4 is 40% more accurate than GPT-3.5.

NewsGuard found in multiple tests that ChatGPT-4 was less likely to refute misinformation about current events. The tests drew on NewsGuard’s database of false narratives and conspiracy theories.

Axios, which originally reported on the study, notes that Microsoft, one of OpenAI’s biggest investors, also backs NewsGuard.

ChatGPT-4 provided more fake stories than 3.5

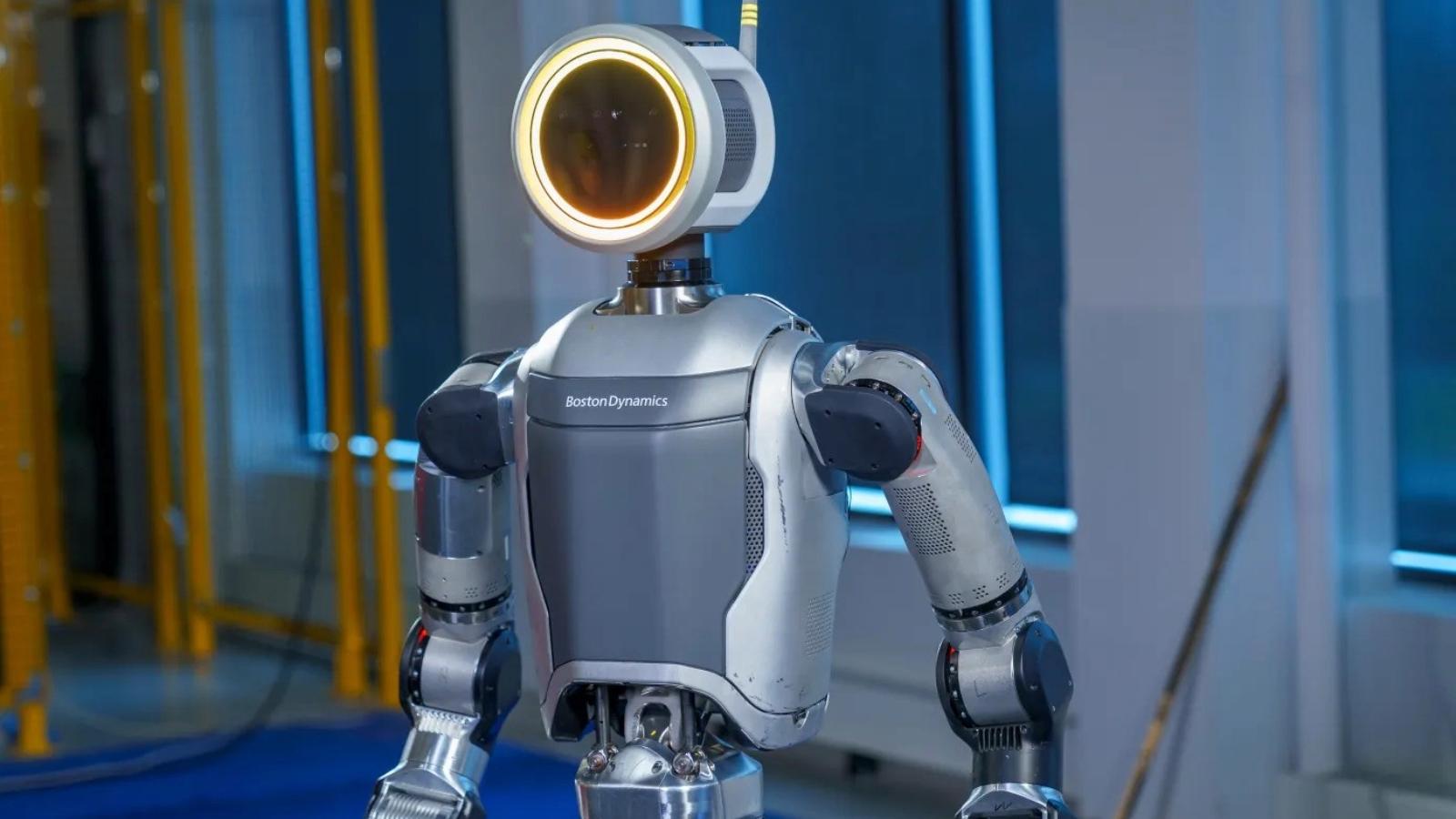

OpenAI

The tests involved prompting ChatGPT-4 and previous versions with 100 fake news narratives. Ideally, the chatbot would refute the false claims and provide factually correct answers so that misinformation isn’t spread.

However, while ChatGPT 3.5 produced 80 of the 100 false narratives, ChatGPT-4 produced all 100. In other words, the AI gave the user false information without any attempt to check itself.

OpenAI has warned multiple times against using ChatGPT as a source of information. However, it’s now concerned that its AI could be “overly gullible in accepting obvious false statements from a user.”

When NewsGuard prompted ChatGPT-4 to write an article about Sandy Hook, it returned a highly detailed article with no disclaimer that the information had been debunked. ChatGPT 3.5, while slim on niche details, included a disclaimer.

NewsGuard concluded that, based on these results, ChatGPT-4 could be “weaponized” to “spread misinformation at an unprecedented scale”.